This article will explore OpenAI's GPT-3 model for developing a chatbot.

What are GPT-3 and OpenAI?

GPT-3 is the third generation of OpenAI's Generative Pretrained Transformer (GPT) model. It is a large-scale neural network that generates text. OpenAI is an artificial intelligence research laboratory that sells access to its trained models through a paid API. It was founded in December 2015 by a group that included SpaceX founder and Tesla CEO Elon Musk, Sam Altman, and Greg Brockman, formerly of payment provider Stripe.

The only operation GPT-3 performs is completing a provided text. If you give it the start of a sentence, it predicts the next word. If you give it the beginning of a paragraph, it predicts the following few words. Given enough text, it can generate an entirely new piece of writing. The most capable model (Davinci) can even answer real-world questions. For example:

I left my keys in the house, but the door was locked while I was standing outside. What should I do?

GPT-3 responds with:

If you have a spare key, you can use that to enter your house. If you do not have a spare key, you can try asking a neighbor for help, or you can call a locksmith.

Fair enough. It might even solve math questions. For example:

We have x^2 + 7*x + 12 = 0. What is x?

And GPT-3 completes the text with "x = -4 or x = -3", which is indeed the correct answer. Now we add the text "Explain your answer." and run again. It responds with:

The discriminant of this equation is b^2-4ac, which is equal to 7^2-4(1)(12), or 49–48, which is equal to 1. This means that there are two real roots, which are -4 and -3.

Looks smart but not quite right. A positive discriminant tells us that there are two distinct real roots, but not what they are. Still, the model understood the command well. It recognized that this is a quadratic equation, that it can be solved using the quadratic formula, and it gave the correct answer. That is more than I would expect from a random stranger. Hence it's an impressive result for an AI model that is not specifically trained to answer math questions.
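For reference, the step the explanation skipped is plugging the discriminant into the quadratic formula. A worked version, using the same coefficients a = 1, b = 7, c = 12:

```latex
\begin{aligned}
x &= \frac{-b \pm \sqrt{b^2 - 4ac}}{2a}
   = \frac{-7 \pm \sqrt{7^2 - 4 \cdot 1 \cdot 12}}{2 \cdot 1}
   = \frac{-7 \pm 1}{2} \\
x_1 &= \frac{-7 + 1}{2} = -3, \qquad x_2 = \frac{-7 - 1}{2} = -4
\end{aligned}
```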

You can't simply run GPT-3 on your own machine. Inference requires roughly 350GB of GPU memory, and training costs millions of dollars in GPU capacity alone. However, OpenAI provides an easy-to-use API for GPT-3 that responds faster than you can talk. It is pay-as-you-go, with no upfront investment. How cool is that?
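The 350GB figure can be sanity-checked with simple arithmetic. The sketch below assumes 175 billion parameters stored as 16-bit floats (2 bytes each). Both numbers are assumptions that do not come from this article, and the result covers the weights only, not activations or other runtime overhead.

```python
# Assumptions (not stated in the article): 175 billion parameters, 2 bytes each (fp16).
PARAMETERS = 175_000_000_000
BYTES_PER_PARAMETER = 2


def weights_size_gb(parameters: int, bytes_per_parameter: int) -> float:
    """Memory needed to hold the model weights alone, in decimal gigabytes."""
    return parameters * bytes_per_parameter / 1e9


print(weights_size_gb(PARAMETERS, BYTES_PER_PARAMETER))  # 350.0
```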

Why use GPT for chatbots?

We want our chatbot to answer questions related to our business, such as questions about invoices or general support questions. However, the GPT-3 model is not trained on our company's data. As a result, its answers to such questions look valid but are nonsense. An example question:

We want to purchase licenses for the product, but our corporate software management team needs a link and contact information. Can you please provide this information? Thank you,

Without any context, we ask GPT-3 to complete this text, and it responds as follows:

The contact information for our sales team is as follows:

Email: sales@***.com

Phone: +44 (0)330 *** ****

I removed part of the email address and phone number because they are real but not ours. Responding with someone else's email address and phone number is definitely not what we want our chatbot to do. So, what do we do instead?

A more reliable way to get answers is prompt design, specifically phrasing the input as a multiple-choice question. A typical input is:

I would like to purchase additional 20 licenses. How do I go about it?

What is this about?

A. Trial period

B. Subscriptions and billing

C. Request for documentation

D. Feature request

E. Bug report

Answer letter:

GPT-3 completes this input with "B. Subscriptions and billing".
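Assembling such a multiple-choice input by hand gets tedious, so it helps to generate it. The following is a minimal sketch, not the implementation used for this article. The function name `build_choice_prompt` is hypothetical, and the layout simply mirrors the example input.

```python
from string import ascii_uppercase
from typing import List


def build_choice_prompt(message: str, options: List[str]) -> str:
    """Format a customer message and answer options as a multiple-choice prompt."""
    lines = [message, "", "What is this about?"]
    for letter, option in zip(ascii_uppercase, options):
        lines.append(f"{letter}. {option}")
    # End with the cue that tells the model what kind of answer is expected.
    lines.append("Answer letter:")
    return "\n".join(lines)


TOPICS = [
    "Trial period",
    "Subscriptions and billing",
    "Request for documentation",
    "Feature request",
    "Bug report",
]

prompt = build_choice_prompt(
    "I would like to purchase additional 20 licenses. How do I go about it?",
    TOPICS,
)
print(prompt)
```

The resulting string is what gets sent to the model as the text to complete.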

Although GPT-3 will not write correct answers for our chatbot, it can provide helpful information to proceed in the chat flow. However, we might also get these results from a simple Naive Bayes model. The main reason to choose GPT-3 is that it doesn't require training, and hence no training data, to be valuable.

The architecture of a chatbot

Conversational AI services (such as Amazon Lex) require you to define the structure of the conversations they process. The elementary parts of these flows are intents and forms. An intent defines what the user wants, such as upgrading their subscription. That might lead to the "update subscription form", which specifies what information is required. The chatbot recognizes the intent, collects all information for the form, and verifies the request with the user.
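To make the relationship between intents and forms concrete, here is a small sketch of how they could be modeled. It is not the data model of Amazon Lex or any other service. The class names and field names (`number_of_users`, `payment_period`) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Form:
    """The information the chatbot must collect before acting on an intent."""
    name: str
    required_fields: List[str]
    values: Dict[str, str] = field(default_factory=dict)

    def missing_fields(self) -> List[str]:
        """Fields the chatbot still has to ask the user about."""
        return [name for name in self.required_fields if name not in self.values]


@dataclass
class Intent:
    """What the user wants, linked to the form that request requires."""
    name: str
    form: Form


upgrade = Intent(
    name="upgrade_subscription",
    form=Form("update subscription form", ["number_of_users", "payment_period"]),
)
upgrade.form.values["number_of_users"] = "5"
print(upgrade.form.missing_fields())  # ['payment_period']
```

Once `missing_fields()` returns an empty list, the chatbot can move on to verifying the request with the user.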

We can use prompt design to determine the user's intent. In a quick test with real support requests, it classified 9 out of 10 correctly. A few takeaways from our experience:

- Specify what kind of answer is expected (e.g., a letter).

- Use letters instead of numbers for the answer options. Numbers in the question may confuse the model.

- Do not include too many options. Results get worse when more than five options are presented.

- Including an "Other" option does not work. It's almost never used.

- Make sure the option letters (or numbers) are in sequence without gaps. Otherwise, the model may answer B to mean the second option in the list, whatever label that option carries.
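Some of these takeaways can be enforced in code. The sketch below is one possible way to do that, with hypothetical helper names: it assigns consecutive letters, rejects option lists longer than five, and maps the model's completion back to an option. It returns `None` when the completion does not start with a known letter, so the caller can fall back to asking the user.

```python
import re
from string import ascii_uppercase
from typing import Dict, List, Optional

MAX_OPTIONS = 5  # results reportedly get worse with more than five options


def letter_options(options: List[str]) -> Dict[str, str]:
    """Assign consecutive letters (A, B, C, ...) to the options."""
    if len(options) > MAX_OPTIONS:
        raise ValueError(f"Use at most {MAX_OPTIONS} options per prompt")
    return dict(zip(ascii_uppercase, options))


def parse_choice(completion: str, lettered: Dict[str, str]) -> Optional[str]:
    """Map a completion such as ' B. Subscriptions and billing' to its option."""
    # Accept a single capital letter at the start, followed by a word boundary.
    match = re.match(r"\s*([A-Z])\b", completion)
    if match is None:
        return None
    return lettered.get(match.group(1))


lettered = letter_options(
    ["Trial period", "Subscriptions and billing", "Request for documentation"]
)
print(parse_choice(" B. Subscriptions and billing", lettered))
```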

If you need more options, it's possible to add another prompt to get more detail. For example, when the first prompt (question shown earlier) responds with "B. Subscriptions and billing", our following options might include "Upgrade plan", "Downgrade plan", "Cancel subscription", and so on.

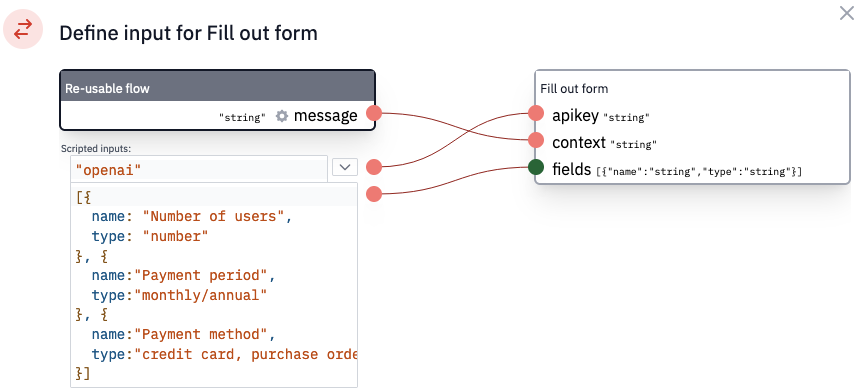

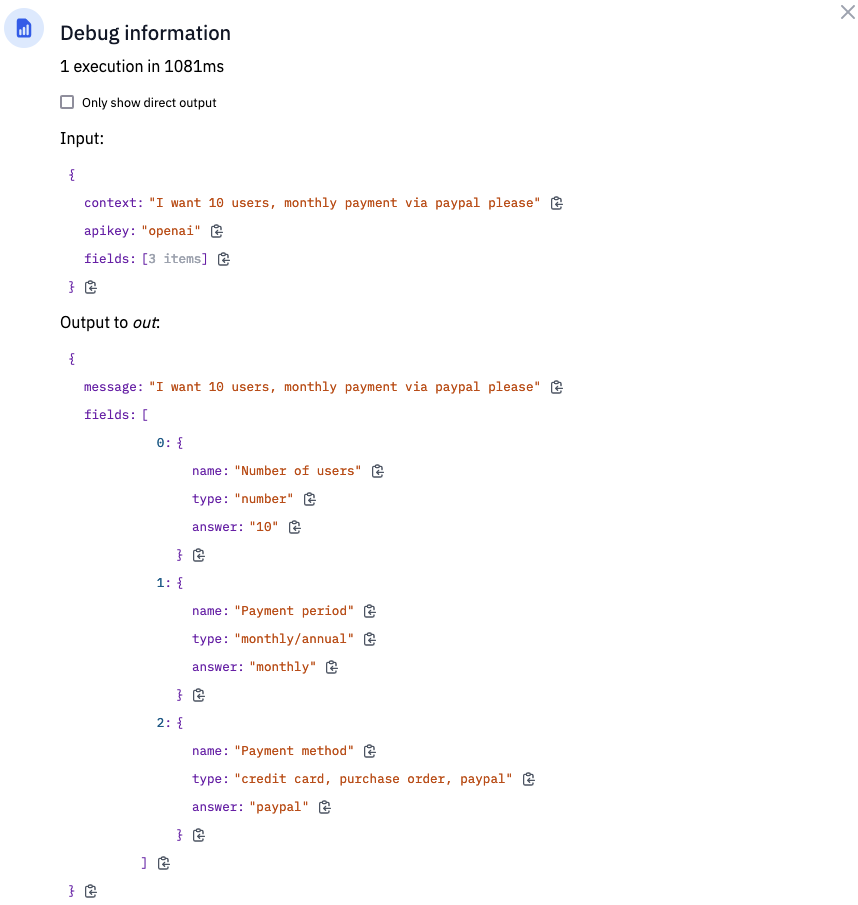
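Narrowing down the topic with a follow-up prompt could look roughly like the sketch below. It is an illustration under assumptions, not our production code: the function names are hypothetical, and `complete` stands for any function that sends a prompt to the model and returns the completion text. A stub replaces the real API call here so the example runs offline.

```python
from string import ascii_uppercase
from typing import Callable, Dict, List, Optional

# Hypothetical follow-up options per top-level topic.
FOLLOW_UPS: Dict[str, List[str]] = {
    "Subscriptions and billing": ["Upgrade plan", "Downgrade plan", "Cancel subscription"],
}


def classify(message: str, options: List[str], complete: Callable[[str], str]) -> Optional[str]:
    """Ask one multiple-choice question and return the chosen option, if any."""
    lettered = dict(zip(ascii_uppercase, options))
    lines = [message, "", "What is this about?"]
    lines += [f"{letter}. {option}" for letter, option in lettered.items()]
    lines.append("Answer letter:")
    answer = complete("\n".join(lines)).strip()
    return lettered.get(answer[:1])


def classify_in_steps(message: str, top_level: List[str], complete: Callable[[str], str]) -> List[str]:
    """Keep asking follow-up questions until there are no more options."""
    path: List[str] = []
    options = top_level
    while options:
        choice = classify(message, options, complete)
        if choice is None:
            break
        path.append(choice)
        options = FOLLOW_UPS.get(choice, [])
    return path


def fake_complete(prompt: str) -> str:
    """Stand-in for the model: picks A on the follow-up prompt, B otherwise."""
    return " A" if "Upgrade plan" in prompt else " B"


path = classify_in_steps(
    "I would like to purchase additional 20 licenses. How do I go about it?",
    ["Trial period", "Subscriptions and billing", "Request for documentation"],
    fake_complete,
)
print(path)  # ['Subscriptions and billing', 'Upgrade plan']
```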

The next step is to collect the information for the form. We've tried the following setup:

Hi, I would like to purchase a 1-year plan for 5 users. how can I get the license code, and what payment options do you have?

Answer the questions.

- Number of users (number)

- Payment period (monthly/annual)

- Payment method (credit card, purchase order, paypal)

Number of users: 5

Payment period: Annual

Payment method: Credit card

The input ended after "Number of users:". The questions are repeated, and the answers are given in the format we intended. However, the response for the payment method reveals an issue: the customer never mentioned one, so the model just makes a random guess. It will never leave the field empty or use terms like "not given". We've tried adding "not specified" as an option in the question. That works sometimes, but the option is still not very likely to be chosen. Therefore, it's crucial to always check the answers with the user.
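The form setup can be sketched in code as well. The helpers below are hypothetical names that mirror the example: one builds the prompt so that it ends right after the first field label, one parses the "Name: value" lines of the completion, and one produces the confirmation question, since guessed values must always be checked with the user.

```python
from typing import Dict

# Field names and hints as used in the example prompt.
FIELDS = {
    "Number of users": "number",
    "Payment period": "monthly/annual",
    "Payment method": "credit card, purchase order, paypal",
}


def build_form_prompt(message: str, fields: Dict[str, str]) -> str:
    """Build a prompt that ends right after the label of the first field."""
    lines = [message, "", "Answer the questions."]
    lines += [f"- {name} ({hint})" for name, hint in fields.items()]
    first_field = next(iter(fields))
    lines += ["", f"{first_field}:"]
    return "\n".join(lines)


def parse_form(completion: str, fields: Dict[str, str]) -> Dict[str, str]:
    """Parse 'Name: value' lines; the completion continues the first field's line."""
    first_field = next(iter(fields))
    text = f"{first_field}:{completion}"
    values = {}
    for line in text.splitlines():
        name, separator, value = line.partition(":")
        if separator and name.strip() in fields:
            values[name.strip()] = value.strip()
    return values


def confirmation_message(values: Dict[str, str]) -> str:
    """Ask the user to verify the values, because the model may have guessed."""
    summary = ", ".join(f"{name}: {value}" for name, value in values.items())
    return f"I understood the following. Is this correct? {summary}"


completion = " 5\nPayment period: Annual\nPayment method: Credit card"
values = parse_form(completion, FIELDS)
print(confirmation_message(values))
```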

Getting started on the OpenAI playground

If you're interested in building a chatbot with OpenAI, you'll first need to sign up for an account on the OpenAI Playground. This online tool allows you to experiment with GPT-3 and its different models and settings. You may start with the examples in this article and find out what questions GPT-3 can answer for your use case.

To integrate the model into other tools, you need an API key, which you can get from your OpenAI profile. Send that key as a Bearer token in the Authorization header to start making API requests to OpenAI.
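As an illustration, a request with the key in the Authorization header could be built with Python's standard library as shown below. Treat the details as assumptions: the endpoint URL, the model name `text-davinci-002`, and the parameter values reflect the completions API as we understand it at the time of writing and may have changed, so check the current OpenAI API reference before relying on them. The function names are hypothetical.

```python
import json
import urllib.request

# Assumed endpoint of the completions API; verify against the current API reference.
API_URL = "https://api.openai.com/v1/completions"


def build_completion_request(api_key: str, prompt: str,
                             model: str = "text-davinci-002") -> urllib.request.Request:
    """Build (but do not send) a completion request authenticated with the API key."""
    payload = {"model": model, "prompt": prompt, "max_tokens": 16, "temperature": 0}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def complete(api_key: str, prompt: str) -> str:
    """Send the request and return the text of the first completion."""
    with urllib.request.urlopen(build_completion_request(api_key, prompt)) as response:
        body = json.load(response)
    # Assumed response shape: {"choices": [{"text": "..."}]}
    return body["choices"][0]["text"]
```

Calling `complete(api_key, prompt)` performs the actual network request. Keep the key out of source control, for example by reading it from an environment variable.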

Using GPT-3 in Flowlet

The "OpenAI GPT-3" package in the Flowlet templates provides two blocks that implement the prompt design: one to answer questions and one to fill out forms. These blocks take care of formatting the prompt and parsing its result. You only need to add the OpenAI API key to the Flowlet secrets (under settings) and provide it as input to the block, together with your query.

The provided fields are amended with the answers from OpenAI.
